News

Trusted Uncertainty in Large Language Models: A Unified Framework for Confidence Calibration and Risk-Controlled Refusal

arXiv:2509.01455v1 Announce Type: new Abstract: Deployed language models must decide not only what to answer...

Trinity-RFT: A General-Purpose and Unified Framework for Reinforcement Fine-Tuning of Large Language Models

arXiv:2505.17826v2 Announce Type: replace-cross Abstract: Trinity-RFT is a general-purpose, unified and easy-to-use framework designed for...

TRIM: Token-wise Attention-Derived Saliency for Data-Efficient Instruction Tuning

arXiv:2510.07118v2 Announce Type: replace Abstract: Instruction tuning is essential for aligning large language models (LLMs)...

Transforming Wearable Data into Personal Health Insights using Large Language Model Agents

arXiv:2406.06464v3 Announce Type: replace-cross Abstract: Deriving personalized insights from popular wearable trackers requires complex numerical...

Transferring Expert Cognitive Models to Social Robots via Agentic Concept Bottleneck Models

arXiv:2508.03998v1 Announce Type: new Abstract: Successful group meetings, such as those implemented in group behavioral-change...

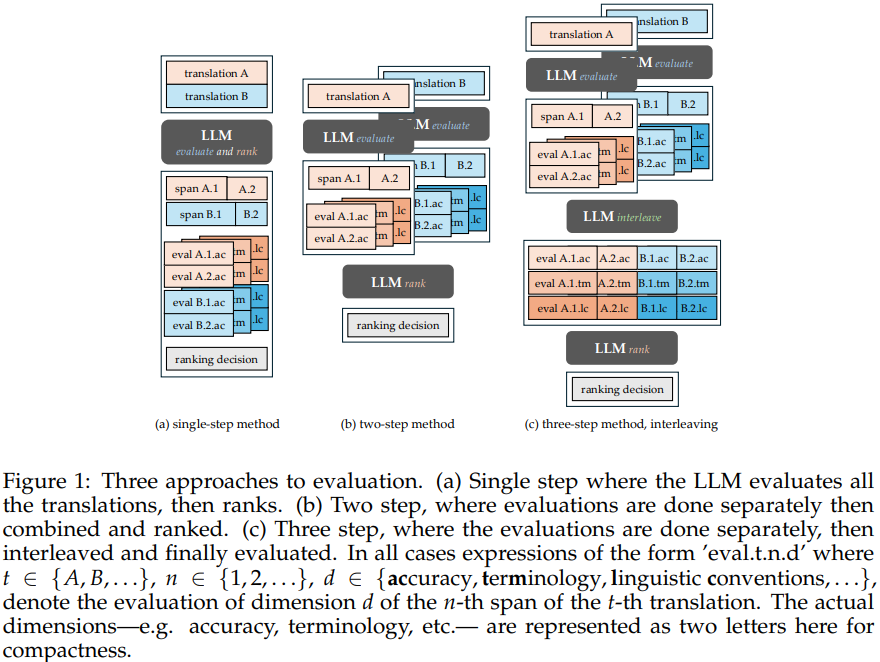

TransEvalnia: A Prompting-Based System for Fine-Grained, Human-Aligned Translation Evaluation Using LLMs

Translation systems powered by LLMs have become so advanced that they can outperform human translators...

Traits Run Deep: Enhancing Personality Assessment via Psychology-Guided LLM Representations and Multimodal Apparent Behaviors

arXiv:2507.22367v1 Announce Type: new Abstract: Accurate and reliable personality assessment plays a vital role in...

Training LLMs to be Better Text Embedders through Bidirectional Reconstruction

arXiv:2509.03020v1 Announce Type: new Abstract: Large language models (LLMs) have increasingly been explored as powerful...

Training Language Model Agents to Find Vulnerabilities with CTF-Dojo

arXiv:2508.18370v1 Announce Type: cross Abstract: Large language models (LLMs) have demonstrated exceptional capabilities when trained...

Training a Model on Multiple GPUs with Data Parallelism

This article is divided into two parts; they are: • Data Parallelism • Distributed Data...

Train Your Large Model on Multiple GPUs with Tensor Parallelism

This article is divided into five parts; they are: • An Example of Tensor Parallelism...

Train Your Large Model on Multiple GPUs with Fully Sharded Data Parallelism

This article is divided into five parts; they are: • Introduction to Fully Sharded Data...