News

Stronger Normalization-Free Transformers

arXiv:2512.10938v1 Announce Type: cross Abstract: Although normalization layers have long been viewed as indispensable components...

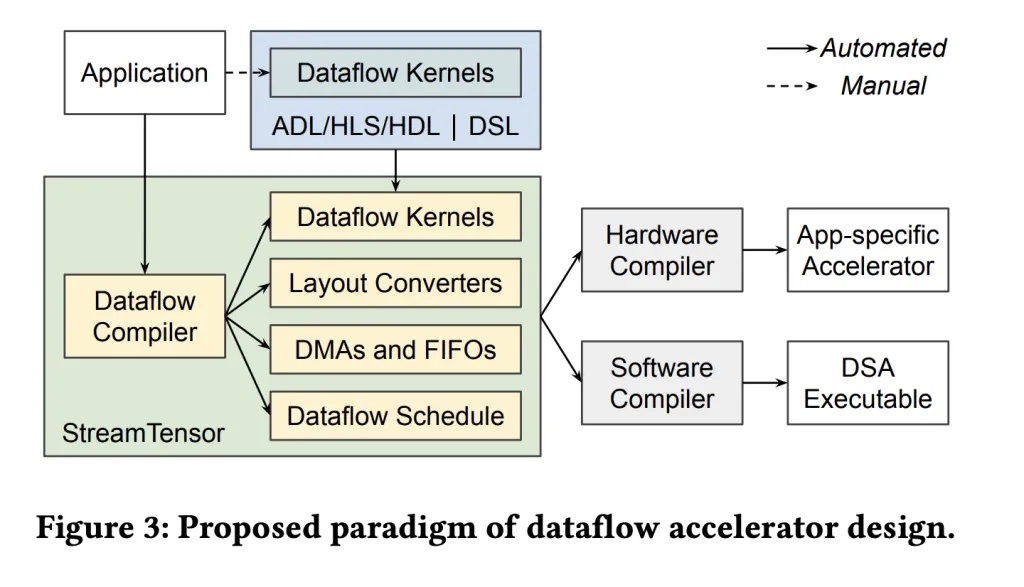

StreamTensor: A PyTorch-to-Accelerator Compiler that Streams LLM Intermediates Across FPGA Dataflows

Why treat LLM inference as batched kernels to DRAM when a dataflow compiler can pipe...

Streaming Sequence-to-Sequence Learning with Delayed Streams Modeling

arXiv:2509.08753v1 Announce Type: new Abstract: We introduce Delayed Streams Modeling (DSM), a flexible formulation for...

Stop guessing why your LLMs break: Anthropic’s new tool shows you exactly what goes wrong

Anthropic’s open-source circuit tracing tool can help developers debug, optimize, and control AI for reliable...

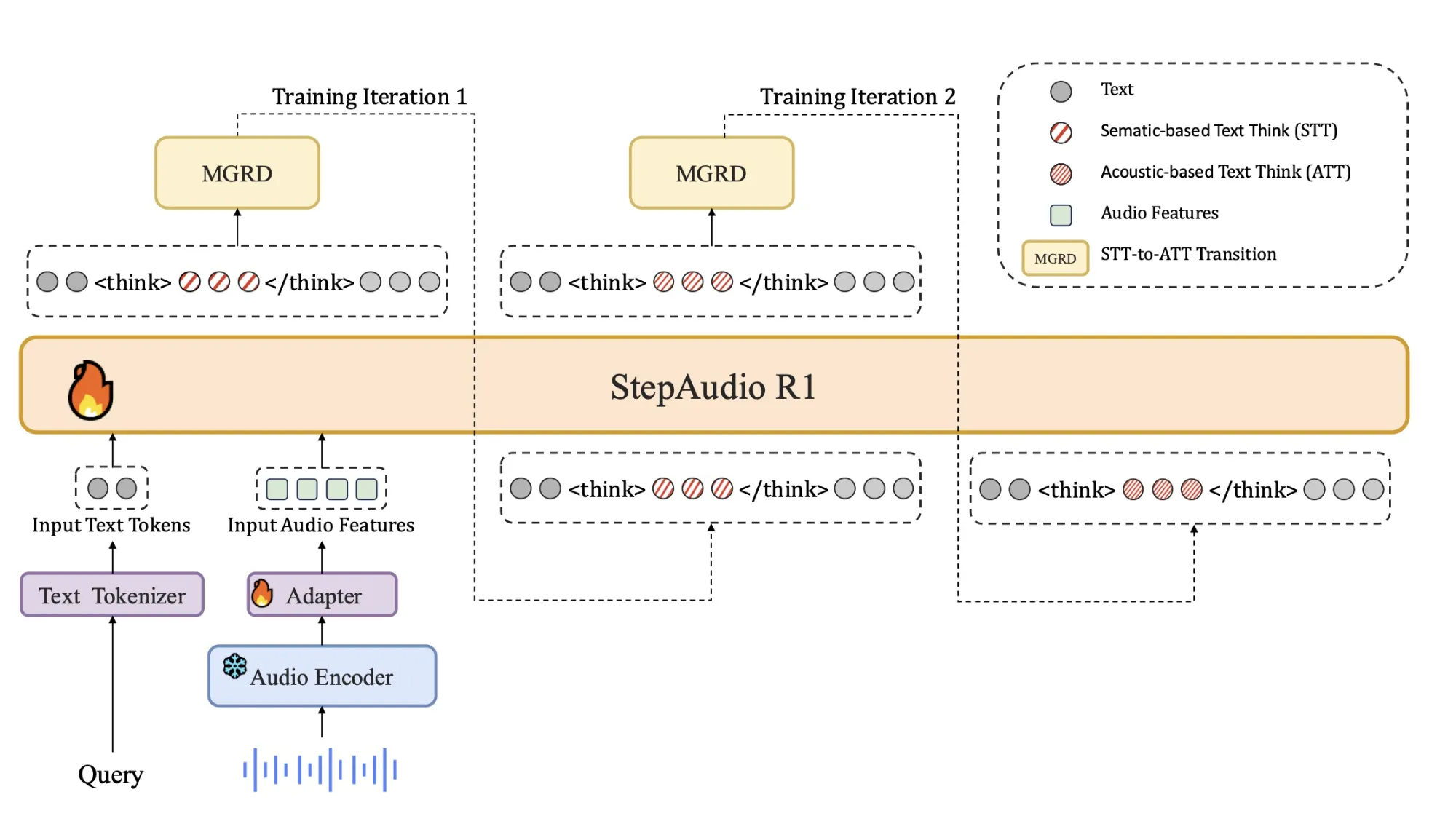

StepFun AI Releases Step-Audio-R1: A New Audio LLM that Finally Benefits from Test Time Compute Scaling

Why do current audio AI models often perform worse when they generate longer reasoning instead...

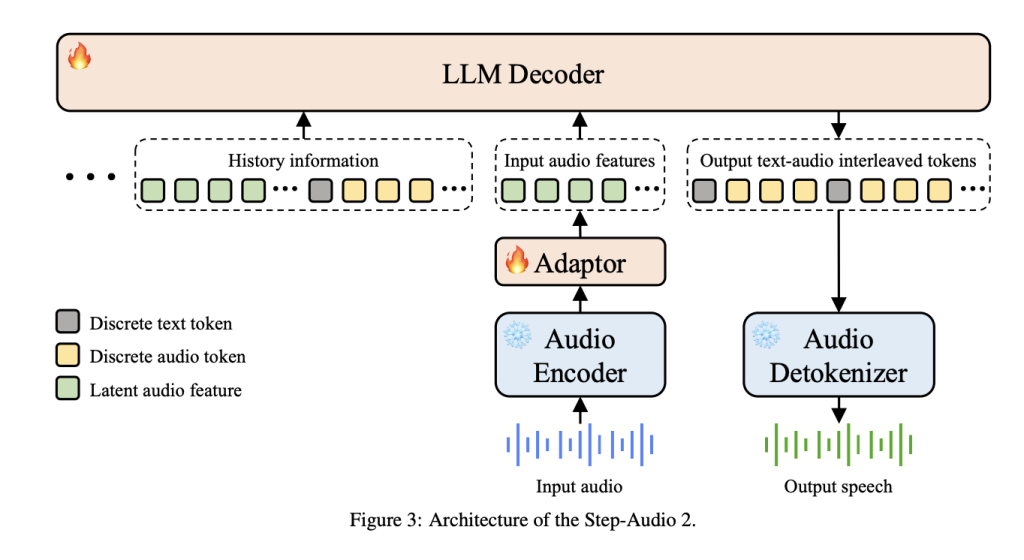

StepFun AI Releases Step-Audio 2 Mini: An Open-Source 8B Speech-to-Speech AI Model that Surpasses GPT-4o-Audio

The StepFun AI team has released Step-Audio 2 Mini, an 8B parameter speech-to-speech large audio...

STEPER: Step-wise Knowledge Distillation for Enhancing Reasoning Ability in Multi-Step Retrieval-Augmented Language Models

arXiv:2510.07923v1 Announce Type: new Abstract: Answering complex real-world questions requires step-by-step retrieval and integration of...

Step-level Verifier-guided Hybrid Test-Time Scaling for Large Language Models

arXiv:2507.15512v3 Announce Type: replace Abstract: Test-Time Scaling (TTS) is a promising approach to progressively elicit...

Steering Language Models with Weight Arithmetic

arXiv:2511.05408v1 Announce Type: new Abstract: Providing high-quality feedback to Large Language Models (LLMs) on a...

Start Making Sense(s): A Developmental Probe of Attention Specialization Using Lexical Ambiguity

arXiv:2511.21974v1 Announce Type: new Abstract: Despite an in-principle understanding of self-attention matrix operations in Transformer...

STAR-Bench: Probing Deep Spatio-Temporal Reasoning as Audio 4D Intelligence

arXiv:2510.24693v2 Announce Type: replace-cross Abstract: Despite rapid progress in Multi-modal Large Language Models and Large...

Stand Up for Research, Innovation, and Education

Right now, MIT alumni and friends are voicing their support for: America’s scientific and technological...