The Download: longevity myths, and sewer-cleaning robots

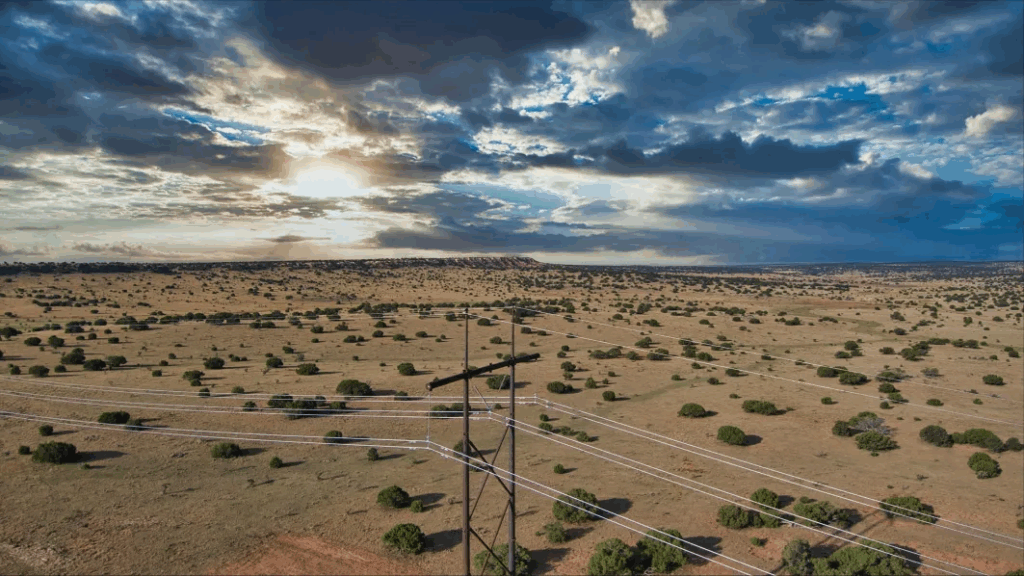

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology. Putin says organ transplants could grant immortality. Not quite. —Jessica Hamzelou Earlier this week, my editor forwarded me a video of the leaders of Russia and China talking about immortality. “These days at 70 years old you are still a child,” China’s Xi Jinping, 72, was translated as saying. “With the developments of biotechnology, human organs can be continuously transplanted, and people can live younger and younger, and even achieve immortality,” Russia’s Vladimir Putin, also 72, is reported to have replied. In reality, rounds of organ transplantation surgery aren’t likely to help anyone radically extend their lifespan anytime soon. And it’s a simplistic way to think about aging—a process so complicated that researchers can’t agree on what causes it, why it occurs, or even how to define it, let alone “treat” it. Read the full story. This article first appeared in The Checkup, MIT Technology Review’s weekly biotech newsletter. To receive it in your inbox every Thursday, and read articles like this first, sign up here. India is using robots to clean sewer pipes so humans no longer have to When Jitender was a child in New Delhi, both his parents worked as manual scavengers—a job that involved clearing the city’s sewers by hand. Now, he is among almost 200 contractors involved in the Delhi government’s effort to shift from this manual process to safer mechanical methods. Although it has been outlawed since 1993, manual scavenging—the practice of extracting human excreta from toilets, sewers, or septic tanks—is still practiced widely in India. And not only is the job undignified, but it can be extremely dangerous. Now, several companies have emerged to offer alternatives at a wide range of technical complexity. Read the full story. —Hamaad Habibullah This story is from our new print edition, which is all about the future of security. Subscribe here to catch future copies when they land. The must-reads I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology. 1 RFK Jr buried a major study linking alcohol and cancerClearly, the alcohol industry’s intense lobbying of the Trump administration is working. (Vox)+ RFK Jr repeated health untruths during a marathon Senate hearing yesterday. (Mother Jones)+ His anti-vaccine stance alarmed Democrats and Republicans alike. (The Atlantic $) 2 US tech giants want to embed AI in educationThey’re backing a vaguely worded initiative to that effect launched by Melania Trump. (Rolling Stone $)+ Tech leaders took it in turns to praise Trump during dinner. (WSJ $)+ Elon Musk was nowhere to be seen. (The Guardian)+ AI’s giants want to take over the classroom. (MIT Technology Review) 3 The FTC will probe AI companies over their impact on children In a bid to evaluate whether chatbots are harming their mental health. (WSJ $)+ An AI companion site is hosting sexually charged conversations with underage celebrity bots. (MIT Technology Review) 4 Podcasting giant Joe Rogan has been spreading climate misinformationHe’s grossly misinterpreted scientists’ research—and they’re exasperated. (The Guardian)+ Rogan claims the Earth’s temperature is plummeting. It isn’t. (Forbes)+ Why climate researchers are taking the temperature of mountain snow. (MIT Technology Review) 5 DeepSeek is working on its own advanced AI agentWatch out, OpenAI. (Bloomberg $) 6 OpenAI will start making its own AI chips next yearIn a bid to lessen its reliance on Nvidia. (FT $) 7 Warner Bros is suing MidjourneyThe AI startup used the likenesses of characters including Superman without permission, it alleges. (Bloomberg $)+ What comes next for AI copyright lawsuits? (MIT Technology Review) 8 Rivers and lakes are being used to cool down buildingsBut networks in Paris, Toronto, the US are facing a looming problem. (Wired $)+ The future of urban housing is energy-efficient refrigerators. (MIT Technology Review) 9 How high school reunions survive in the age of social mediaCuriosity is a powerful driving force, it seems. (The Atlantic $) 10 Facebook’s poke feature is back If I still used Facebook, I’d be thrilled. (TechCrunch) Quote of the day “Even if it doesn’t turn you into the alien if you eat this stuff, I guarantee you’ll grow an extra ear.” —Senator John Kennedy, a Republican from Louisiana, warns of dire consequences if Americans eat shrimp from countries other than the US, Gizmodo reports. One more thing Why one developer won’t quit fighting to connect the US’s gridsMichael Skelly hasn’t learned to take no for an answer. For much of the last 15 years, the energy entrepreneur has worked to develop long-haul transmission lines to carry wind power across the Great Plains, Midwest, and Southwest. But so far, he has little to show for the effort. Skelly has long argued that building such lines and linking together the nation’s grids would accelerate the shift from coal- and natural-gas-fueled power plants to the renewables needed to cut the pollution driving climate change. But his previous business shut down in 2019, after halting two of its projects and selling off interests in three more. Skelly contends he was early, not wrong. And he has a point: market and policymakers are increasingly coming around to his perspective. Read the full story. —James Temple We can still have nice things A place for comfort, fun and distraction to brighten up your day. (Got any ideas? Drop me a line or skeet ’em at me.) + The Paper, the new mockumentary from the makers of the American Office, looks interesting.+ Giorgio Armani was a true maestro of menswear.+ The phases of the moon are pretty fascinating + The Damien Hirst-directed video for Blur’s classic Country House has been given a 4K makeover.

The Download: longevity myths, and sewer-cleaning robots Read Post »