RA3: Mid-Training with Temporal Action Abstractions for Faster Reinforcement Learning (RL) Post-Training in Code LLMs

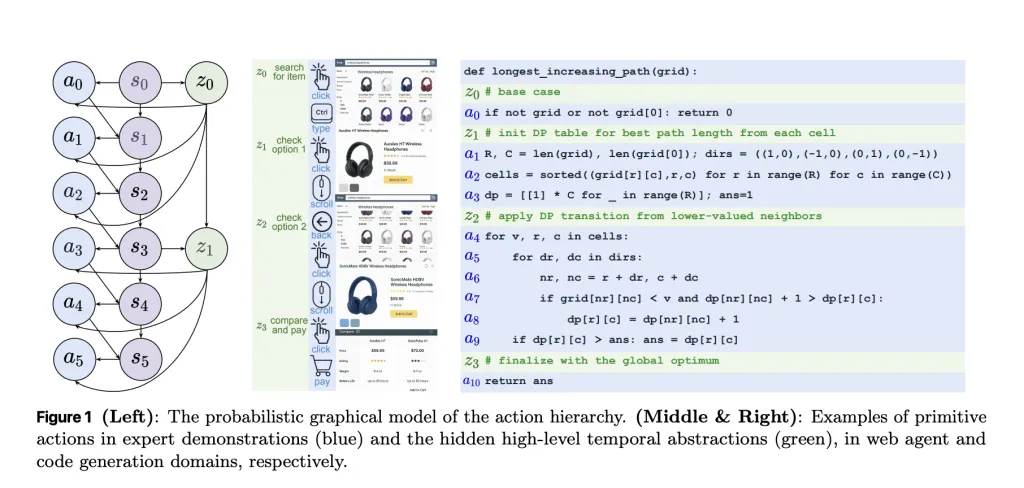

TL;DR: New research from Apple formalizes what "mid-training" should do before reinforcement learning (RL) post-training. It also introduces RA3 (Reasoning as Action Abstractions), an EM-style procedure that learns temporally consistent latent actions from expert traces and then fine-tunes on those bootstrapped traces. The paper shows that mid-training should (1) prune the action space to a compact, near-optimal subspace and (2) shorten the effective planning horizon, both of which improve RL convergence. Empirically, RA3 improves HumanEval and MBPP by about 8 and 4 points, respectively, over the base model and a next-token-prediction (NTP) mid-training baseline. It also accelerates reinforcement learning with verifiable rewards (RLVR) on HumanEval+, MBPP+, LiveCodeBench, and Codeforces.

What does the research present?

The research team presents what it describes as the first formal treatment of how mid-training shapes RL post-training. They break outcomes down into two determinants:

- (i) Pruning efficiency: how well mid-training selects a compact, near-optimal subset of actions that forms the initial policy prior.
- (ii) RL convergence: how quickly post-training improves within that restricted set.

The analysis argues that mid-training is most effective when the decision space is compact and the effective horizon is short, which favors temporal abstractions over primitive next-token actions.

Paper: https://arxiv.org/pdf/2509.25810

Algorithm: RA3 in one pass

RA3 derives a sequential variational lower bound (a temporal ELBO) and optimizes it with an EM-like loop:

- E-step (latent discovery): use RL to infer temporally consistent latent structures (abstractions) aligned to expert sequences.
- M-step (model update): perform next-token prediction on the bootstrapped, latent-annotated traces so that those abstractions become part of the model's policy.

Results: code generation and RLVR

On Python code tasks, the research team reports results across multiple base models. RA3 improves average pass@k on HumanEval and MBPP by about 8 and 4 points, respectively, over both the base model and an NTP mid-training baseline.
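The gains above are reported as average pass@k. The standard unbiased pass@k estimator was introduced with HumanEval (Chen et al., 2021). It works as follows: sample n completions per problem, count the c that pass the unit tests, and estimate the probability that at least one of k draws passes. Below is a minimal Python sketch of that estimator. The article does not state RA3's sampling budget or exact estimator, so it is only an assumption that the paper's evaluation matches this sketch. The helper names `pass_at_k` and `average_pass_at_k` and the toy counts are hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of sampled completions
    c: number of completions that pass all unit tests
    k: number of attempts being scored
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples fail, every size-k subset contains a passing sample.
    if n - c < k:
        return 1.0
    # 1 - P(a uniformly random size-k subset of the n samples has no passing sample)
    return 1.0 - comb(n - c, k) / comb(n, k)


def average_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Benchmark-level pass@k: mean of per-problem estimates, given (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem (n samples, c passing) counts; NOT data from the paper.
toy_results = [(10, 3), (10, 0), (10, 10)]
print(round(average_pass_at_k(toy_results, k=1), 4))  # → 0.4333
print(round(average_pass_at_k(toy_results, k=5), 4))  # → 0.6389
```

Drawing k samples and checking them directly would give a noisy estimate. The ratio of binomial coefficients instead yields an unbiased estimate with lower variance from the same n completions.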
In post-training, RLVR initialized from RA3 converges faster and reaches higher final performance on HumanEval+, MBPP+, LiveCodeBench, and Codeforces. The pass@k gains are mid-training effects, and the RLVR gains are post-training effects. All evaluations are on code generation.

Key Takeaways

- The research team formalizes mid-training via two determinants, pruning efficiency and impact on RL convergence. It argues that effectiveness rises when the decision space is compact and the effective horizon is short.
- RA3 optimizes a sequential variational lower bound by iteratively discovering temporally consistent latent structures with RL and then fine-tuning on the bootstrapped traces (EM-style).
- On code generation, RA3 reports average pass@k gains of about +8 (HumanEval) and +4 (MBPP) over base and NTP mid-training baselines across several model scales.
- Initializing post-training with RA3 accelerates RLVR convergence and improves asymptotic performance on HumanEval+, MBPP+, LiveCodeBench, and Codeforces.

Editorial Comments

RA3's contribution is concrete and narrow. It formalizes mid-training around two determinants, pruning efficiency and RL convergence. It then operationalizes them via a temporal ELBO, optimized in an EM loop, to learn persistent action abstractions before RLVR. The researchers report average pass@k gains of about +8 (HumanEval) and +4 (MBPP) over base/NTP, plus faster RLVR convergence on HumanEval+, MBPP+, LiveCodeBench, and Codeforces.

The post RA3: Mid-Training with Temporal Action Abstractions for Faster Reinforcement Learning (RL) Post-Training in Code LLMs appeared first on MarkTechPost.