News

FinCoT: Grounding Chain-of-Thought in Expert Financial Reasoning

arXiv:2506.16123v2 Announce Type: replace Abstract: This paper presents FinCoT, a structured chain-of-thought (CoT) prompting framework...

FinBERT-QA: Financial Question Answering with pre-trained BERT Language Models

arXiv:2505.00725v1 Announce Type: new Abstract: Motivated by the emerging demand in the financial industry for...

FHSTP@EXIST 2025 Benchmark: Sexism Detection with Transparent Speech Concept Bottleneck Models

arXiv:2507.20924v1 Announce Type: new Abstract: Sexism has become widespread on social media and in online...

FELLE: Autoregressive Speech Synthesis with Token-Wise Coarse-to-Fine Flow Matching

arXiv:2502.11128v2 Announce Type: replace Abstract: To advance continuous-valued token modeling and temporal-coherence enforcement, we propose...

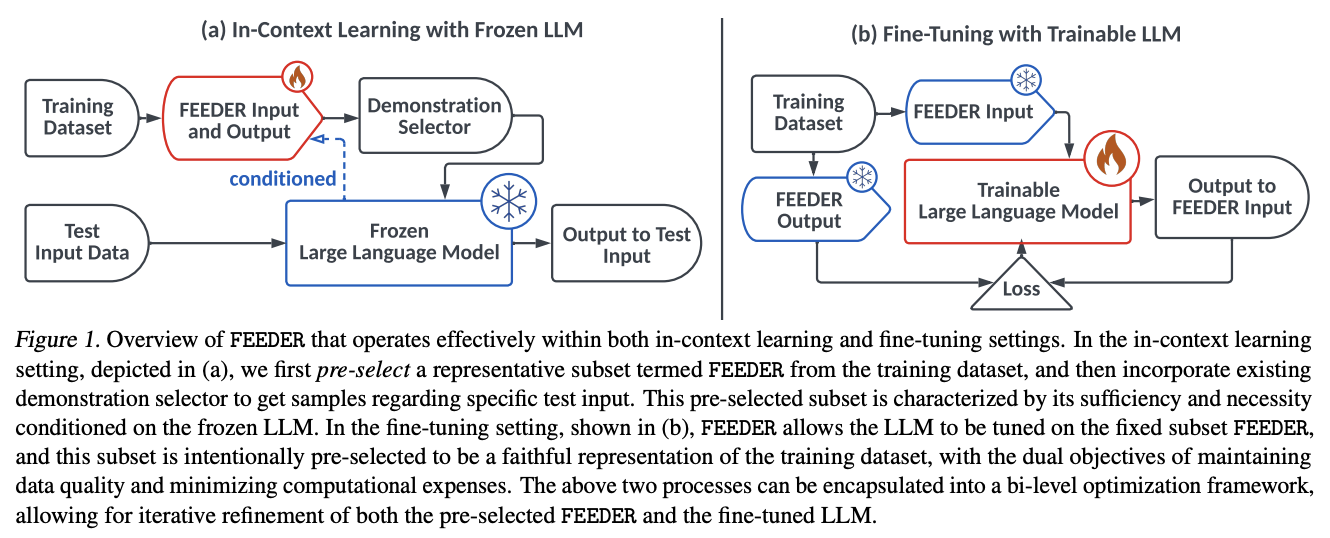

FEEDER: A Pre-Selection Framework for Efficient Demonstration Selection in LLMs

LLMs have demonstrated exceptional performance across multiple tasks by utilizing few-shot inference, also known as...

Feedback Indicators: The Alignment between Llama and a Teacher in Language Learning

arXiv:2508.11364v1 Announce Type: new Abstract: Automated feedback generation has the potential to enhance students’ learning...

Faster and Better LLMs via Latency-Aware Test-Time Scaling

arXiv:2505.19634v4 Announce Type: replace Abstract: Test-Time Scaling (TTS) has proven effective in improving the performance...

Fast, Slow, and Tool-augmented Thinking for LLMs: A Review

arXiv:2508.12265v1 Announce Type: new Abstract: Large Language Models (LLMs) have demonstrated remarkable progress in reasoning...

FalseReject: A Resource for Improving Contextual Safety and Mitigating Over-Refusals in LLMs via Structured Reasoning

arXiv:2505.08054v1 Announce Type: new Abstract: Safety alignment approaches in large language models (LLMs) often lead...

False Sense of Security: Why Probing-based Malicious Input Detection Fails to Generalize

arXiv:2509.03888v1 Announce Type: new Abstract: Large Language Models (LLMs) can comply with harmful instructions, raising...

Fair-GPTQ: Bias-Aware Quantization for Large Language Models

arXiv:2509.15206v1 Announce Type: new Abstract: High memory demands of generative language models have drawn attention...

Failure by Interference: Language Models Make Balanced Parentheses Errors When Faulty Mechanisms Overshadow Sound Ones

arXiv:2507.00322v1 Announce Type: new Abstract: Despite remarkable advances in coding capabilities, language models (LMs) still...