News

Probing the Critical Point (CritPt) of AI Reasoning: a Frontier Physics Research Benchmark

arXiv:2509.26574v3 Announce Type: replace-cross Abstract: While large language models (LLMs) with reasoning capabilities are progressing...

Probing Neural Topology of Large Language Models

arXiv:2506.01042v3 Announce Type: replace Abstract: Probing large language models (LLMs) has yielded valuable insights into...

Probabilistic Aggregation and Targeted Embedding Optimization for Collective Moral Reasoning in Large Language Models

arXiv:2506.14625v2 Announce Type: replace Abstract: Large Language Models (LLMs) have shown impressive moral reasoning abilities...

Proactive Defense: Compound AI for Detecting Persuasion Attacks and Measuring Inoculation Effectiveness

arXiv:2511.21749v1 Announce Type: new Abstract: This paper introduces BRIES, a novel compound AI architecture designed...

PRISM: Prompt-Refined In-Context System Modelling for Financial Retrieval

arXiv:2511.14130v1 Announce Type: cross Abstract: With the rapid progress of large language models (LLMs), financial...

Prioritizing Image-Related Tokens Enhances Vision-Language Pre-Training

arXiv:2505.08971v1 Announce Type: cross Abstract: In standard large vision-language models (LVLMs) pre-training, the model typically...

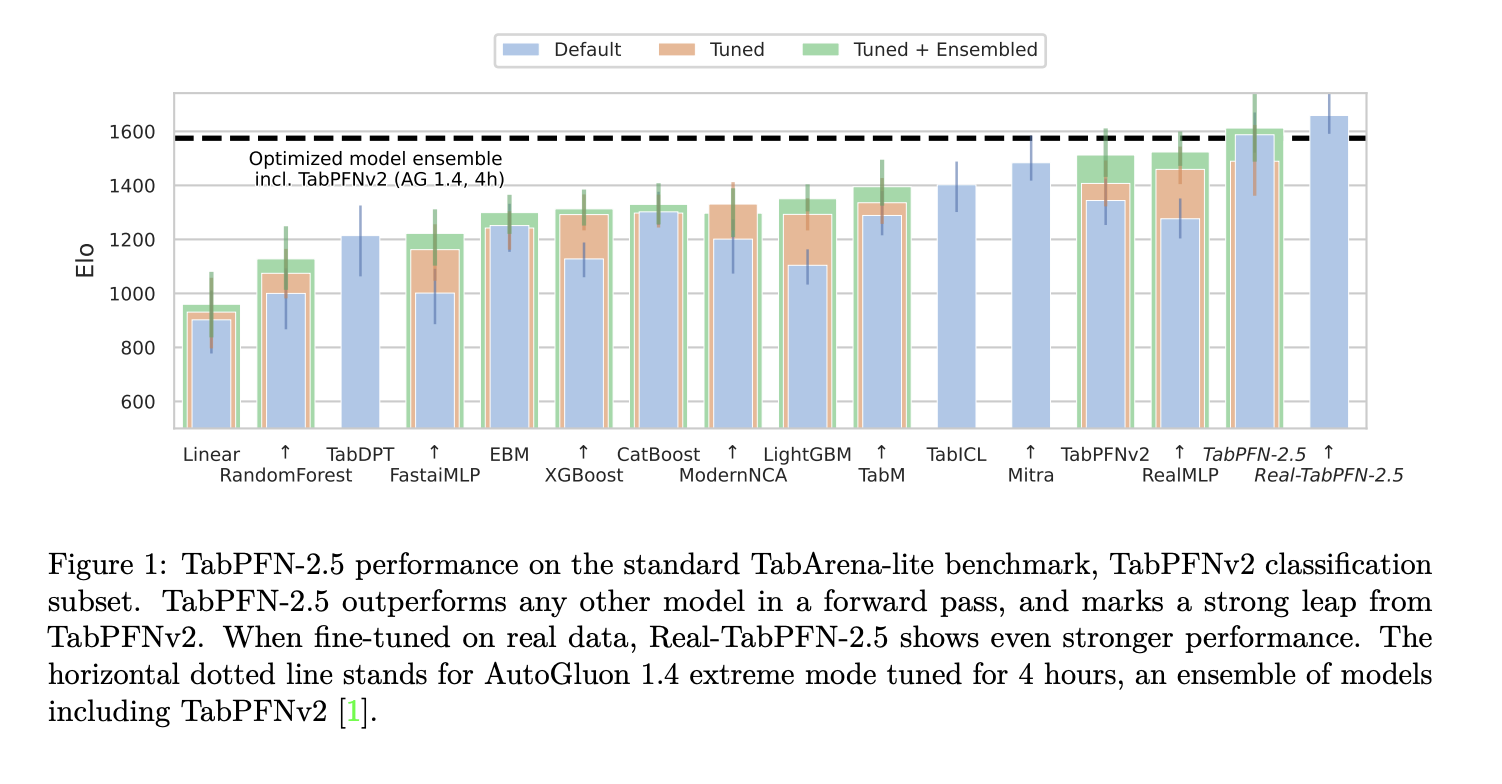

Prior Labs Releases TabPFN-2.5: The Latest Version of TabPFN that Unlocks Scale and Speed for Tabular Foundation Models

Tabular data is still where many important models run in production. Finance, healthcare, energy and...

Pretrain a BERT Model from Scratch

This article is divided into three parts; they are: • Creating a BERT Model the...

Preparing Data for BERT Training

This article is divided into four parts; they are: • Preparing Documents • Creating Sentence...

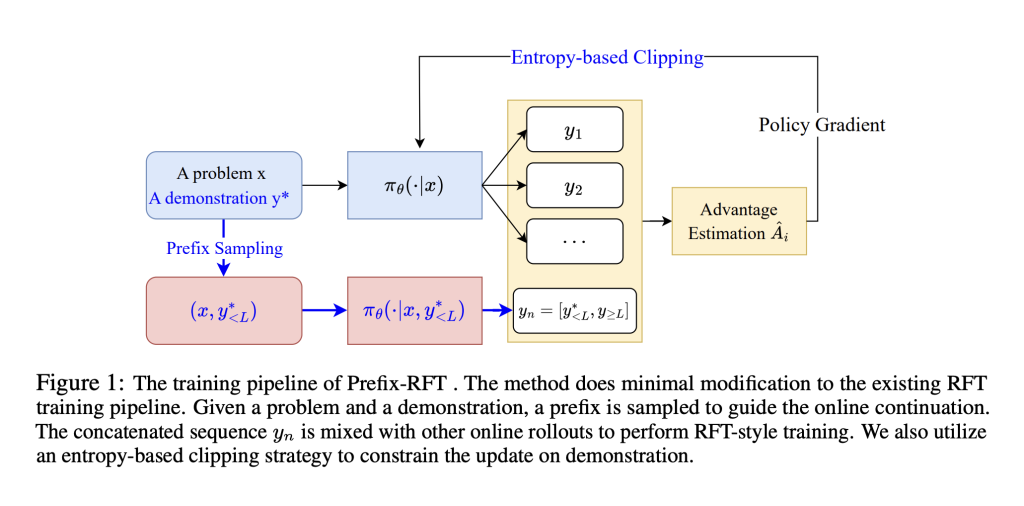

Prefix-RFT: A Unified Machine Learning Framework to blend Supervised Fine-Tuning (SFT) and Reinforcement Fine-Tuning (RFT)

Large language models are typically refined after pretraining using either supervised fine-tuning (SFT) or reinforcement...

Predicting the Performance of Black-box LLMs through Self-Queries

arXiv:2501.01558v3 Announce Type: replace-cross Abstract: As large language models (LLMs) are increasingly relied on in...

Precise Attribute Intensity Control in Large Language Models via Targeted Representation Editing

arXiv:2510.12121v1 Announce Type: cross Abstract: Precise attribute intensity control–generating Large Language Model (LLM) outputs with...