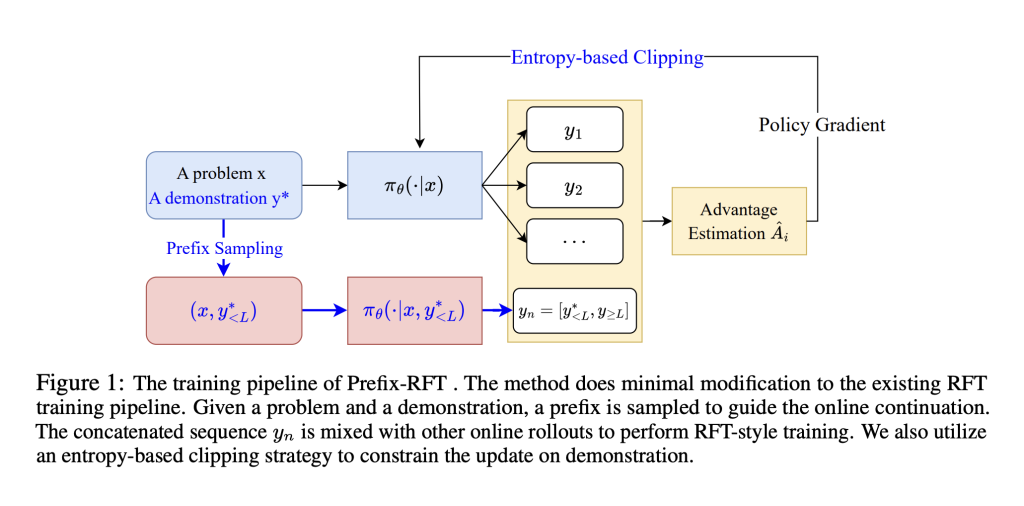

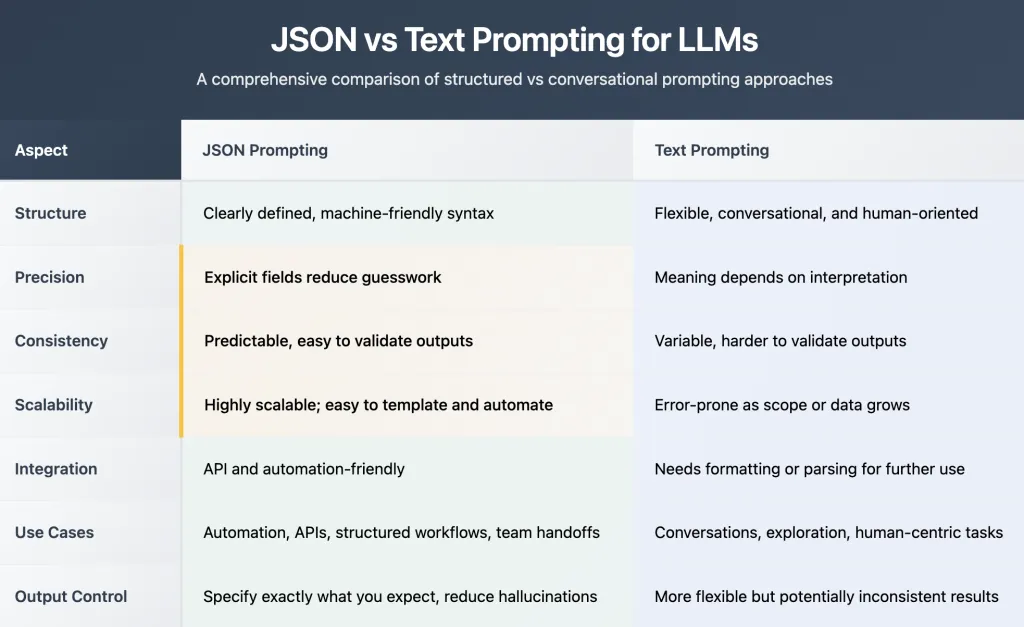

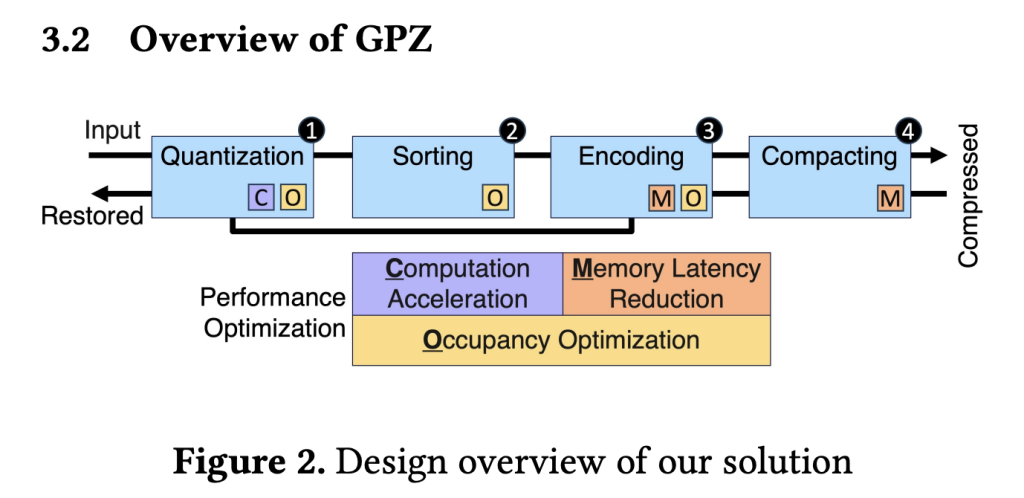

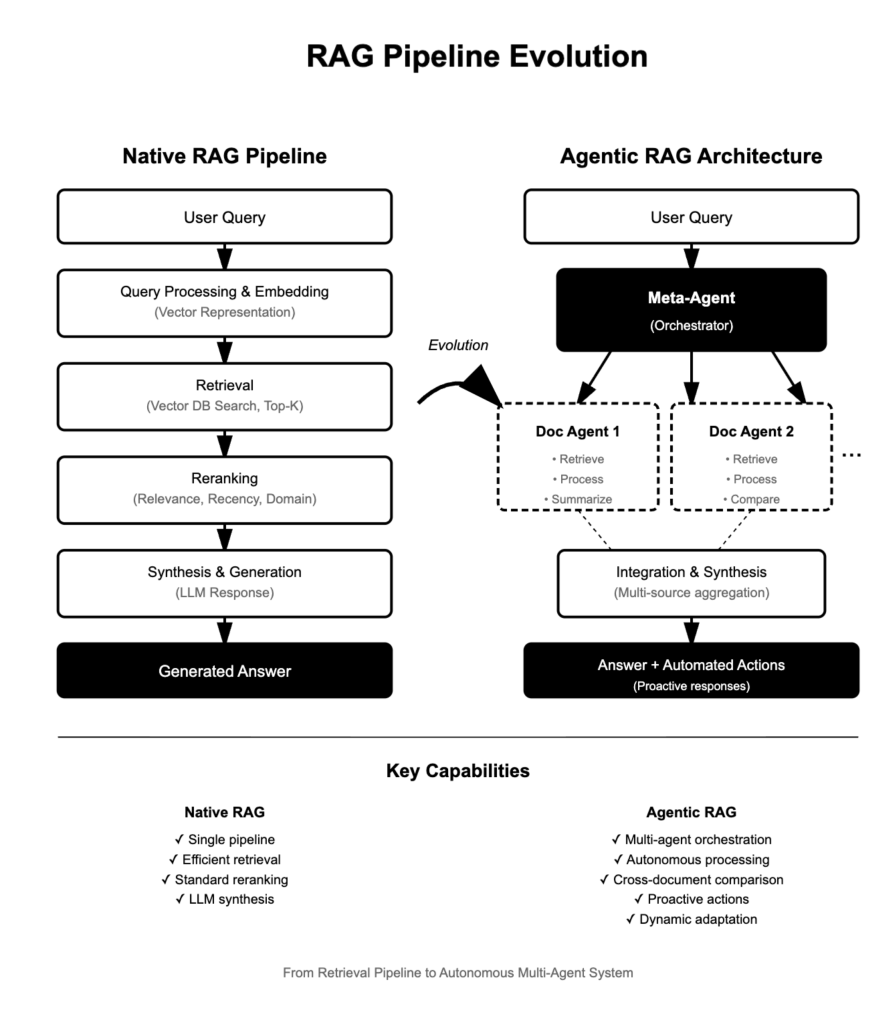

Table of contents

1. Regulatory and Risk Posture
2. Capability vs. Cost, Latency, and Footprint
3. Security and Compliance Trade-offs
4. Deployment Patterns
5. Decision Matrix (Quick Reference)
6. Concrete Use-Cases
7. Performance/Cost Levers Before “Going Bigger”
EXAMPLES

No single choice universally wins between Large Language Models (LLMs, ≥30B parameters, often accessed via APIs) and Small Language Models (SLMs, ~1–15B parameters, typically open-weights or proprietary specialist models). For banks, insurers, and asset managers in 2025, the selection should be governed by regulatory risk, data sensitivity, latency and cost requirements, and the complexity of the use case.

SLM-first is recommended for structured information extraction, customer service, coding assistance, and internal knowledge tasks, especially with retrieval-augmented generation (RAG) and strong guardrails. Escalate to LLMs for heavy synthesis, multi-step reasoning, or when SLMs cannot meet your performance bar within the latency/cost envelope.

Governance is mandatory for both: treat LLMs and SLMs under your model risk management (MRM) framework, align to the NIST AI RMF, and map high-risk applications (such as credit scoring) to obligations under the EU AI Act.

1. Regulatory and Risk Posture

Financial services are subject to mature model governance standards. In the US, SR 11-7 (Federal Reserve/OCC/FDIC) covers any model used for business decisioning, including LLMs and SLMs. Validation, monitoring, and documentation are therefore required irrespective of model size.

The NIST AI Risk Management Framework (AI RMF 1.0) is a widely adopted reference for AI risk controls, used by financial institutions for both traditional and generative AI risks.

In the EU, the AI Act is in force, with staged compliance dates (Aug 2025 for general-purpose models, Aug 2026 for high-risk systems such as credit scoring per Annex III). High-risk status means pre-market conformity assessment, risk management, documentation, logging, and human oversight.
Institutions targeting the EU must align remediation timelines accordingly.

Core sectoral data rules apply:

GLBA Safeguards Rule: Security controls and vendor oversight for consumer financial data.

PCI DSS v4.0: New cardholder data controls, mandatory from March 31, 2025, with upgraded authentication, retention, and encryption requirements.

Supervisors (FSB/BIS/ECB) and standard setters highlight systemic risk from concentration, vendor lock-in, and model risk; these concerns apply regardless of model size.

Key point: High-risk uses (credit, underwriting) require tight controls regardless of parameter count. Both SLMs and LLMs demand traceable validation, privacy assurance, and sector compliance.

2. Capability vs. Cost, Latency, and Footprint

SLMs (3–15B) now deliver strong accuracy on domain workloads, especially after fine-tuning and with retrieval augmentation. Recent SLMs (e.g., Phi-3, FinBERT, COiN) excel at targeted extraction, classification, and workflow augmentation, cut latency (<50 ms), allow self-hosting for strict data residency, and are feasible for edge deployment.

LLMs unlock cross-document synthesis, heterogeneous data reasoning, and long-context operations (>100K tokens). Domain-specialized LLMs (e.g., BloombergGPT, 50B) can outperform general models on financial benchmarks and multi-step reasoning tasks.

Compute economics: Transformer self-attention scales quadratically with sequence length. Optimizations such as FlashAttention and SlimAttention reduce memory and compute overhead but do not remove the quadratic scaling of exact attention, so long-context LLMs can be far costlier at inference than short-context SLMs.

Key point: Short, structured, latency-sensitive tasks (contact center, claims, KYC extraction, knowledge search) fit SLMs. If you need 100K+ token contexts or deep synthesis, budget for LLMs and mitigate cost via caching and selective “escalation.”

3. Security and Compliance Trade-offs

Common risks: Both model types are exposed to prompt injection, insecure output handling, data leakage, and supply chain risks.
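
As one illustration of guarding against insecure output handling, the sketch below validates a model's JSON output against an allow-list of fields before anything downstream consumes it. The field names (`customer_name`, `document_type`, `expiry_date`) and the function `validate_extraction` are hypothetical, chosen only for illustration; a real control would sit alongside the other guardrails discussed in this section.

```python
import json

# Hypothetical schema for a KYC-style extraction task: field name -> expected type.
EXPECTED_FIELDS = {
    "customer_name": str,
    "document_type": str,
    "expiry_date": str,
}


def validate_extraction(raw_output: str) -> dict:
    """Parse model output and reject anything outside the expected schema."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc

    if not isinstance(data, dict):
        raise ValueError("model output must be a JSON object")

    # Reject fields the schema does not know about instead of passing them on.
    unexpected = set(data) - set(EXPECTED_FIELDS)
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")

    # Every expected field must be present and of the expected type.
    for name, expected_type in EXPECTED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected_type):
            raise ValueError(f"field {name} has the wrong type")

    return data
```

Rejecting unknown fields outright, rather than silently dropping them, keeps malformed or injected output visible in logs and monitoring.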

SLMs: Preferred for self-hosting, which helps satisfy GLBA/PCI/data sovereignty concerns and minimizes legal risk from cross-border transfers.

LLMs: APIs introduce concentration and lock-in risks; supervisors require documented exit, fallback, and multi-vendor strategies.

Explainability: High-risk uses require transparent features, challenger models, full decision logs, and human oversight; LLM reasoning traces cannot substitute for the formal validation required by SR 11-7 and the EU AI Act.

4. Deployment Patterns

Three proven modes in finance:

SLM-first, LLM fallback: Route 80%+ of queries to a tuned SLM with RAG; escalate low-confidence or long-context cases to an LLM. Cost and latency are predictable; good for call centers, operations, and form parsing.

LLM-primary with tool-use: The LLM acts as orchestrator for synthesis, with deterministic tools for data access and calculations, all protected by DLP. Suited for complex research and policy/regulatory work.

Domain-specialized LLM: Large models adapted to financial corpora; higher MRM burden but measurable gains for niche tasks.

Regardless of pattern, always implement content filters, PII redaction, least-privilege connectors, output verification, red-teaming, and continuous monitoring under NIST AI RMF and OWASP guidance.

5. Decision Matrix (Quick Reference)

| Criterion | Prefer SLM | Prefer LLM |
| --- | --- | --- |
| Regulatory exposure | Internal assist, non-decisioning | High-risk use (credit scoring) with full validation |
| Data sensitivity | On-prem/VPC, PCI/GLBA constraints | External API with DLP, encryption, DPAs |
| Latency & cost | Sub-second, high QPS, cost-sensitive | Seconds-latency, batch, low QPS |
| Complexity | Extraction, routing, RAG-aided draft | Synthesis, ambiguous input, long-form context |
| Engineering ops | Self-hosted, CUDA, integration | Managed API, vendor risk, rapid deployment |

6. Concrete Use-Cases

Customer Service: SLM-first with RAG/tools for common issues, LLM escalation for complex multi-policy queries.
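
The SLM-first, LLM-fallback routing described above can be sketched as follows. This is a minimal sketch under stated assumptions: the `ModelReply` type, the confidence score, the 0.8 threshold, the 4,000-token budget, and the whitespace-based token estimate are all hypothetical, and the two callables stand in for whatever SLM and LLM endpoints an institution actually runs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ModelReply:
    text: str
    confidence: float  # assumed to be a calibrated score in [0, 1]


def route(
    query: str,
    slm: Callable[[str], ModelReply],
    llm: Callable[[str], ModelReply],
    min_confidence: float = 0.8,  # hypothetical escalation threshold
    max_slm_tokens: int = 4000,   # hypothetical SLM context budget
) -> tuple[str, ModelReply]:
    """Return (model_used, reply), trying the SLM first."""
    # Rough token estimate; a real system would use the model's tokenizer.
    approx_tokens = len(query.split())
    if approx_tokens > max_slm_tokens:
        # Long-context case: skip the SLM entirely.
        return "llm", llm(query)

    reply = slm(query)
    if reply.confidence >= min_confidence:
        return "slm", reply

    # Low-confidence case: escalate to the larger model.
    return "llm", llm(query)
```

Returning the name of the model used alongside the reply makes it straightforward to log the escalation rate, which is the figure that drives the cost and latency profile of this pattern.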

KYC/AML & Adverse Media: SLMs suffice for extraction/normalization; escalate to LLMs for fraud or multilingual synthesis.

Credit Underwriting: High-risk (EU AI Act Annex III); use SLM/classical ML for decisioning and LLMs for explanatory narratives, always with human review.

Research/Portfolio Notes: LLMs enable draft synthesis and cross-source collation; read-only access, citation logging, and tool verification are recommended.

Developer Productivity: On-prem SLM code assistants for speed and IP safety; LLM escalation for refactoring or complex synthesis.

7. Performance/Cost Levers Before “Going Bigger”

RAG optimization: Most failures are retrieval failures, not “model IQ.” Improve chunking, recency, and relevance ranking before increasing model size.

Prompt/IO controls: Guardrails for input/output schema and anti-prompt-injection measures per OWASP.

Serve-time: Quantize SLMs, page the KV cache, batch/stream, and cache frequent answers; quadratic attention makes indiscriminately long contexts expensive.

Selective escalation: Route by confidence; cost savings above 70% are possible.

Domain adaptation: Lightweight tuning/LoRA on SLMs closes most gaps; use large models only for a clear, measurable lift in performance.

EXAMPLES

Example 1: Contract Intelligence at JPMorgan (COiN)

JPMorgan Chase deployed a specialized Small Language Model (SLM), called COiN, to automate the review of commercial loan agreements, a process traditionally handled manually by legal staff. By training COiN on thousands of legal documents and regulatory filings, the bank cut contract review times from several weeks to mere hours, achieving high accuracy and compliance traceability while drastically reducing operational cost. This targeted SLM solution