News

Improving the Robustness of Distantly-Supervised Named Entity Recognition via Uncertainty-Aware Teacher Learning and Student-Student Collaborative Learning

arXiv:2311.08010v3 Announce Type: replace Abstract: Distantly-Supervised Named Entity Recognition (DS-NER) is widely used in real-world...

Improving Social Determinants of Health Documentation in French EHRs Using Large Language Models

arXiv:2507.03433v1 Announce Type: new Abstract: Social determinants of health (SDoH) significantly influence health outcomes, shaping...

Improving Detection of Watermarked Language Models

arXiv:2508.13131v1 Announce Type: new Abstract: Watermarking has recently emerged as an effective strategy for detecting...

Improved Personalized Headline Generation via Denoising Fake Interests from Implicit Feedback

arXiv:2508.07178v1 Announce Type: new Abstract: Accurate personalized headline generation hinges on precisely capturing user interests...

Implementing DeepSpeed for Scalable Transformers: Advanced Training with Gradient Checkpointing and Parallelism

In this advanced DeepSpeed tutorial, we provide a hands-on walkthrough of cutting-edge optimization techniques for...

Impact of Stickers on Multimodal Sentiment and Intent in Social Media: A New Task, Dataset and Baseline

arXiv:2405.08427v2 Announce Type: replace Abstract: Stickers are increasingly used in social media to express sentiment...

IFEvalCode: Controlled Code Generation

arXiv:2507.22462v2 Announce Type: replace Abstract: Code large language models (Code LLMs) have made significant progress...

IFDECORATOR: Wrapping Instruction Following Reinforcement Learning with Verifiable Rewards

arXiv:2508.04632v1 Announce Type: new Abstract: Reinforcement Learning with Verifiable Rewards (RLVR) improves instruction following capabilities...

If you are a free user of ChatGPT, you can now use its new image generator 4o (again)

OpenAI has opened the floodgates after limiting the use of its new tool due to...

Identifying and Answering Questions with False Assumptions: An Interpretable Approach

arXiv:2508.15139v1 Announce Type: new Abstract: People often ask questions with false assumptions, a type of...

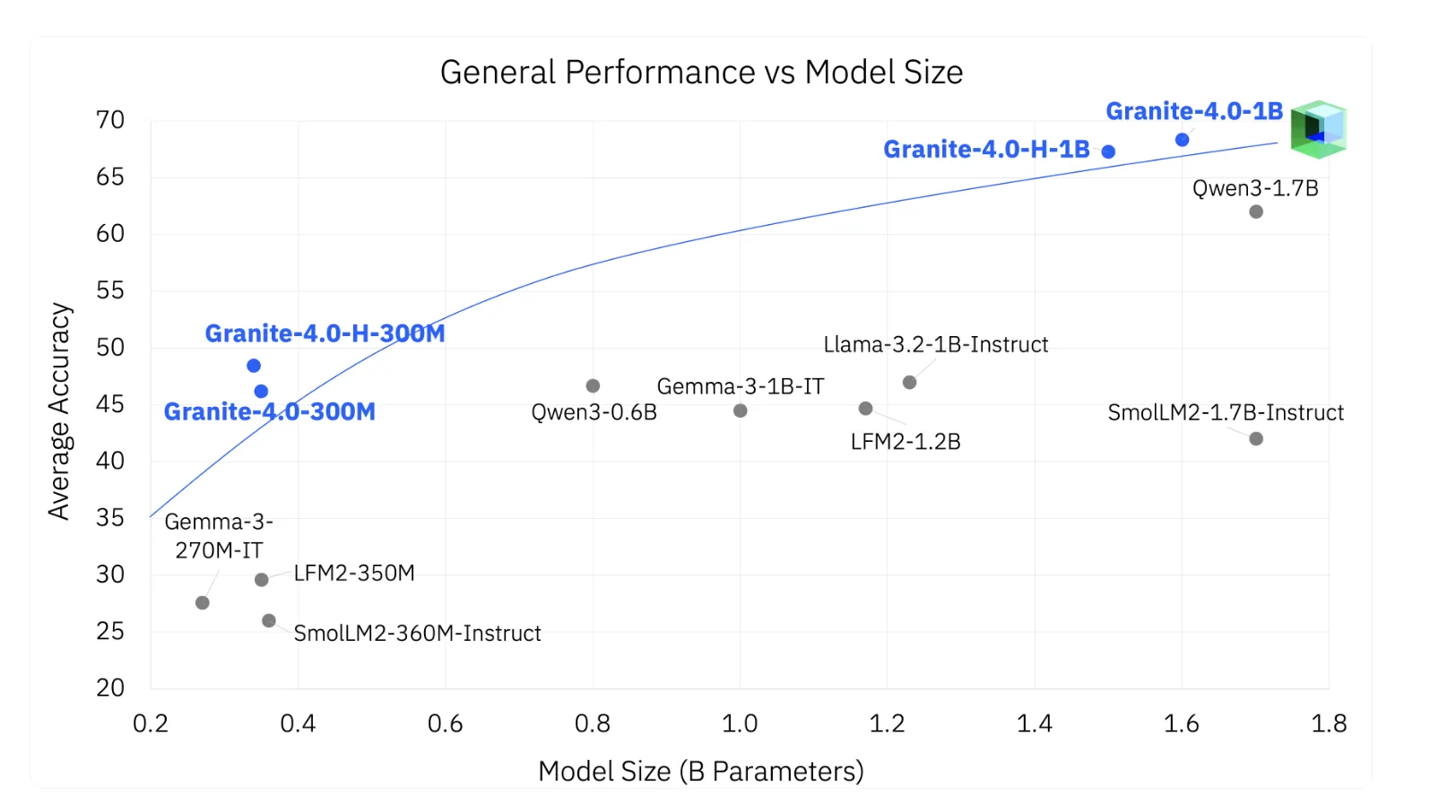

IBM AI Team Releases Granite 4.0 Nano Series: Compact and Open-Source Small Models Built for AI at the Edge

Small models are often blocked by poor instruction tuning, weak tool use formats, and missing...

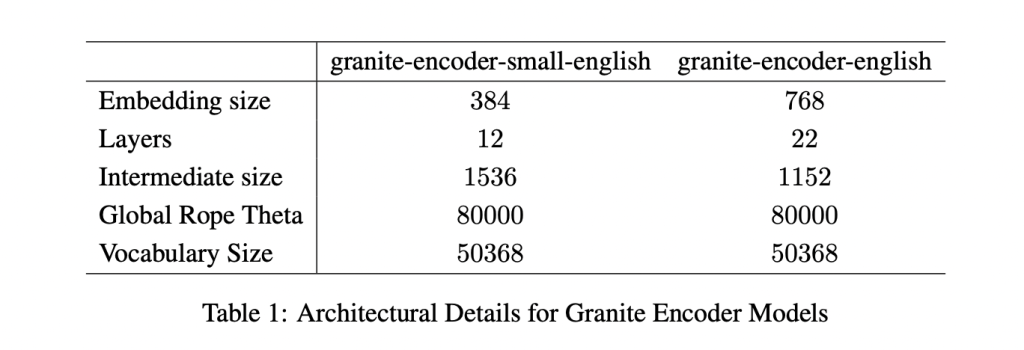

IBM AI Research Releases Two English Granite Embedding Models, Both Based on the ModernBERT Architecture

IBM has quietly built a strong presence in the open-source AI ecosystem, and its latest...