News

Phonely’s new AI agents hit 99% accuracy—and customers can’t tell they’re not human

Phonely, Maitai and Groq achieve breakthrough in AI phone support with sub-second response times and...

Phantom: General Backdoor Attacks on Retrieval Augmented Language Generation

arXiv:2405.20485v3 Announce Type: replace-cross Abstract: Retrieval Augmented Generation (RAG) expands the capabilities of modern large...

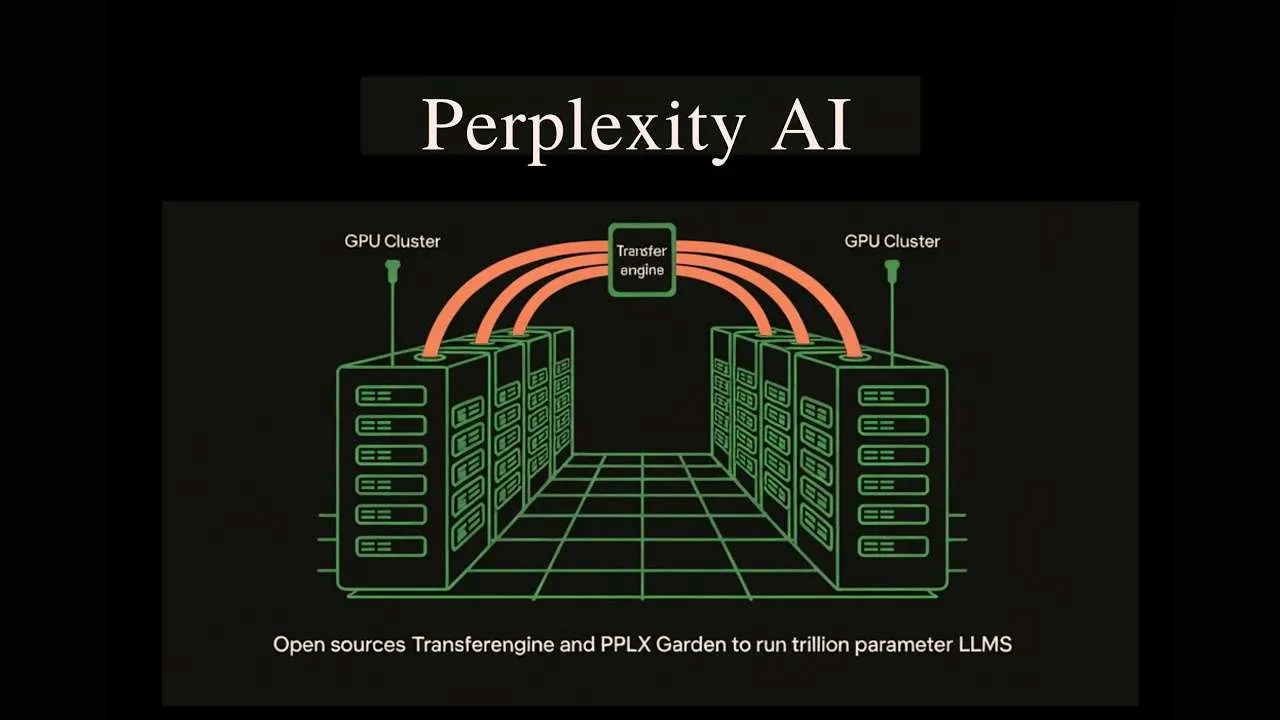

Perplexity AI Releases TransferEngine and pplx garden to Run Trillion Parameter LLMs on Existing GPU Clusters

How can teams run trillion parameter language models on existing mixed GPU clusters without costly...

PERM: Psychology-grounded Empathetic Reward Modeling for Large Language Models

arXiv:2601.10532v2 Announce Type: replace Abstract: Large Language Models (LLMs) are increasingly deployed in human-centric applications...

PerCoR: Evaluating Commonsense Reasoning in Persian via Multiple-Choice Sentence Completion

arXiv:2510.22616v1 Announce Type: new Abstract: We introduced PerCoR (Persian Commonsense Reasoning), the first large-scale Persian...

Pensieve Grader: An AI-Powered, Ready-to-Use Platform for Effortless Handwritten STEM Grading

arXiv:2507.01431v1 Announce Type: cross Abstract: Grading handwritten, open-ended responses remains a major bottleneck in large...

PAT: Accelerating LLM Decoding via Prefix-Aware Attention with Resource Efficient Multi-Tile Kernel

arXiv:2511.22333v2 Announce Type: replace-cross Abstract: LLM serving is increasingly dominated by decode attention, which is...

Partitioner Guided Modal Learning Framework

arXiv:2507.11661v1 Announce Type: new Abstract: Multimodal learning benefits from multiple modal information, and each learned...

Partial Information Decomposition via Normalizing Flows in Latent Gaussian Distributions

arXiv:2510.04417v1 Announce Type: cross Abstract: The study of multimodality has garnered significant interest in fields...

Pardon? Evaluating Conversational Repair in Large Audio-Language Models

arXiv:2601.12973v1 Announce Type: new Abstract: Large Audio-Language Models (LALMs) have demonstrated strong performance in spoken...

Paper2Agent: Reimagining Research Papers As Interactive and Reliable AI Agents

arXiv:2509.06917v2 Announce Type: replace-cross Abstract: We introduce Paper2Agent, an automated framework that converts research papers...

PAG: Multi-Turn Reinforced LLM Self-Correction with Policy as Generative Verifier

arXiv:2506.10406v1 Announce Type: new Abstract: Large Language Models (LLMs) have demonstrated impressive capabilities in complex...