News

Shrink exploit windows, slash MTTP: Why ring deployment is now a must for enterprise defense

Ring deployment slashes MTTP and legacy CVE risk. Learn how Ivanti and Southstar Bank are...

ShareChat: A Dataset of Chatbot Conversations in the Wild

arXiv:2512.17843v2 Announce Type: replace Abstract: While academic research typically treats Large Language Models (LLM) as...

SGPO: Self-Generated Preference Optimization based on Self-Improver

arXiv:2507.20181v1 Announce Type: new Abstract: Large language models (LLMs), despite their extensive pretraining on diverse...

SFT Doesn’t Always Hurt General Capabilities: Revisiting Domain-Specific Fine-Tuning in LLMs

arXiv:2509.20758v3 Announce Type: replace Abstract: Supervised Fine-Tuning (SFT) on domain-specific datasets is a common approach...

SessionIntentBench: A Multi-task Inter-session Intention-shift Modeling Benchmark for E-commerce Customer Behavior Understanding

arXiv:2507.20185v1 Announce Type: new Abstract: Session history is a common way of recording user interacting...

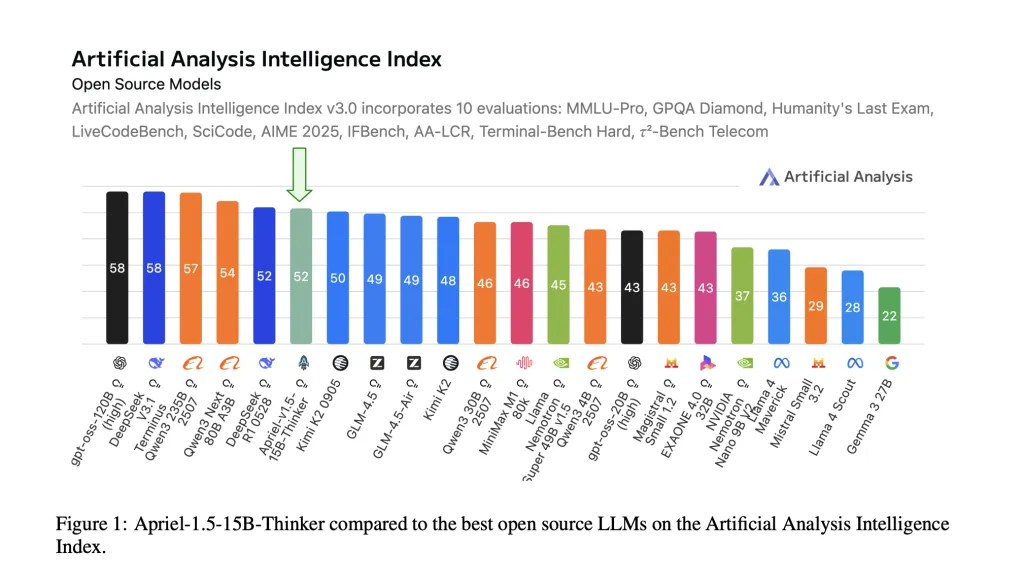

ServiceNow AI Releases Apriel-1.5-15B-Thinker: An Open-Weights Multimodal Reasoning Model that Hits Frontier-Level Performance on a Single-GPU Budget

ServiceNow AI Research Lab has released Apriel-1.5-15B-Thinker, a 15-billion-parameter open-weights multimodal reasoning model trained with...

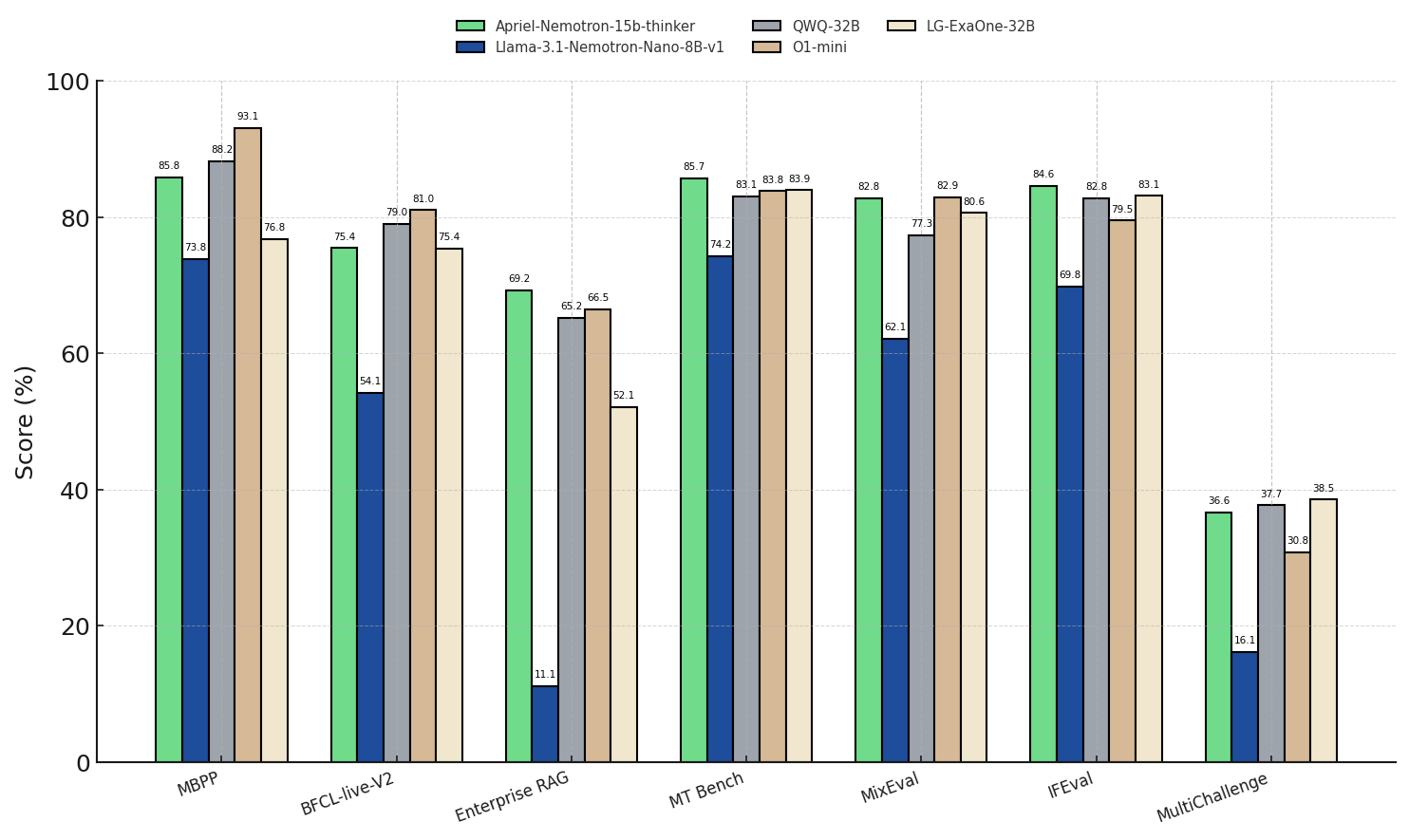

ServiceNow AI Released Apriel-Nemotron-15b-Thinker: A Compact Yet Powerful Reasoning Model Optimized for Enterprise-Scale Deployment and Efficiency

AI models today are expected to handle complex tasks such as solving mathematical problems, interpreting...

Sequence graphs realizations and ambiguity in language models

arXiv:2402.08830v2 Announce Type: replace-cross Abstract: Several popular language models represent local contexts in an input...

Sentiment-Aware Recommendation Systems in E-Commerce: A Review from a Natural Language Processing Perspective

arXiv:2505.03828v1 Announce Type: cross Abstract: E-commerce platforms generate vast volumes of user feedback, such as...

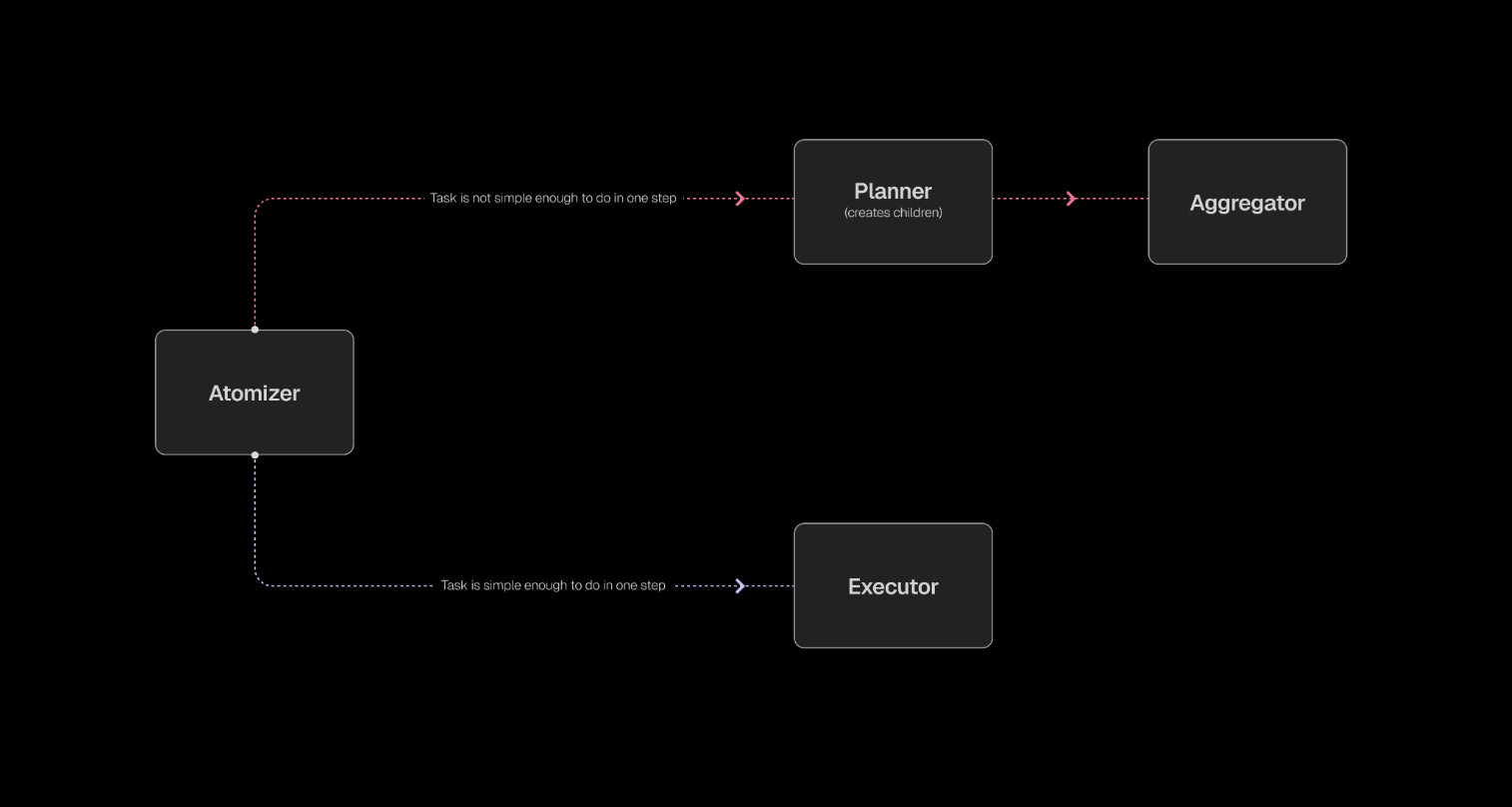

Sentient AI Releases ROMA: An Open-Source and AGI Focused Meta-Agent Framework for Building AI Agents with Hierarchical Task Execution

Sentient AI has released ROMA (Recursive Open Meta-Agent), an open-source meta-agent framework for building high-performance...

Semi-Supervised Learning for Large Language Models Safety and Content Moderation

arXiv:2512.21107v1 Announce Type: new Abstract: Safety for Large Language Models (LLMs) has been an ongoing...

Semantic Reconstruction of Adversarial Plagiarism: A Context-Aware Framework for Detecting and Restoring “Tortured Phrases” in Scientific Literature

arXiv:2512.10435v1 Announce Type: new Abstract: The integrity and reliability of scientific literature is facing a...