News

Tencent Open Sources Hunyuan-A13B: A 13B Active Parameter MoE Model with Dual-Mode Reasoning and 256K Context

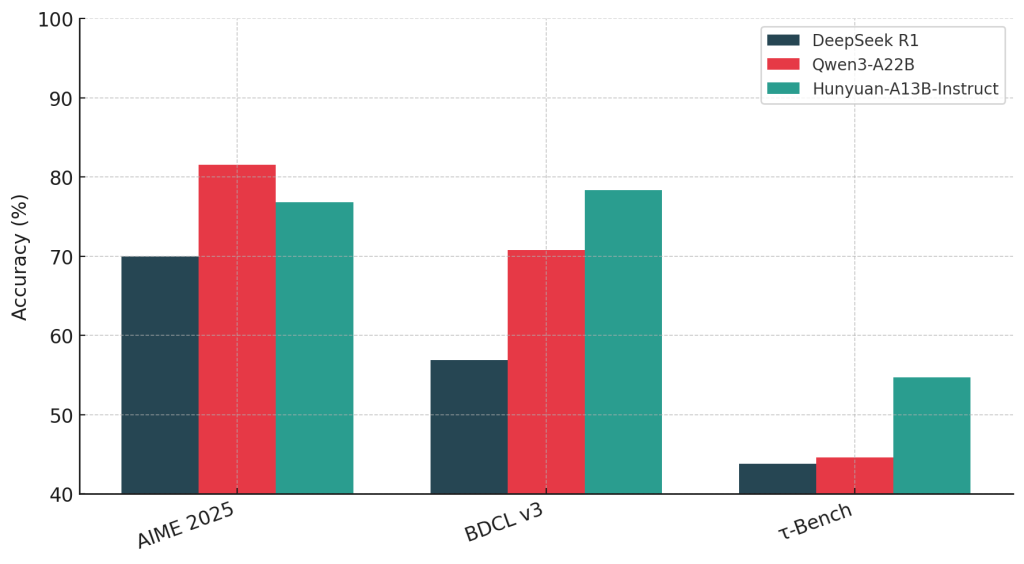

Tencent’s Hunyuan team has introduced Hunyuan-A13B, a new open-source large language model built on a...

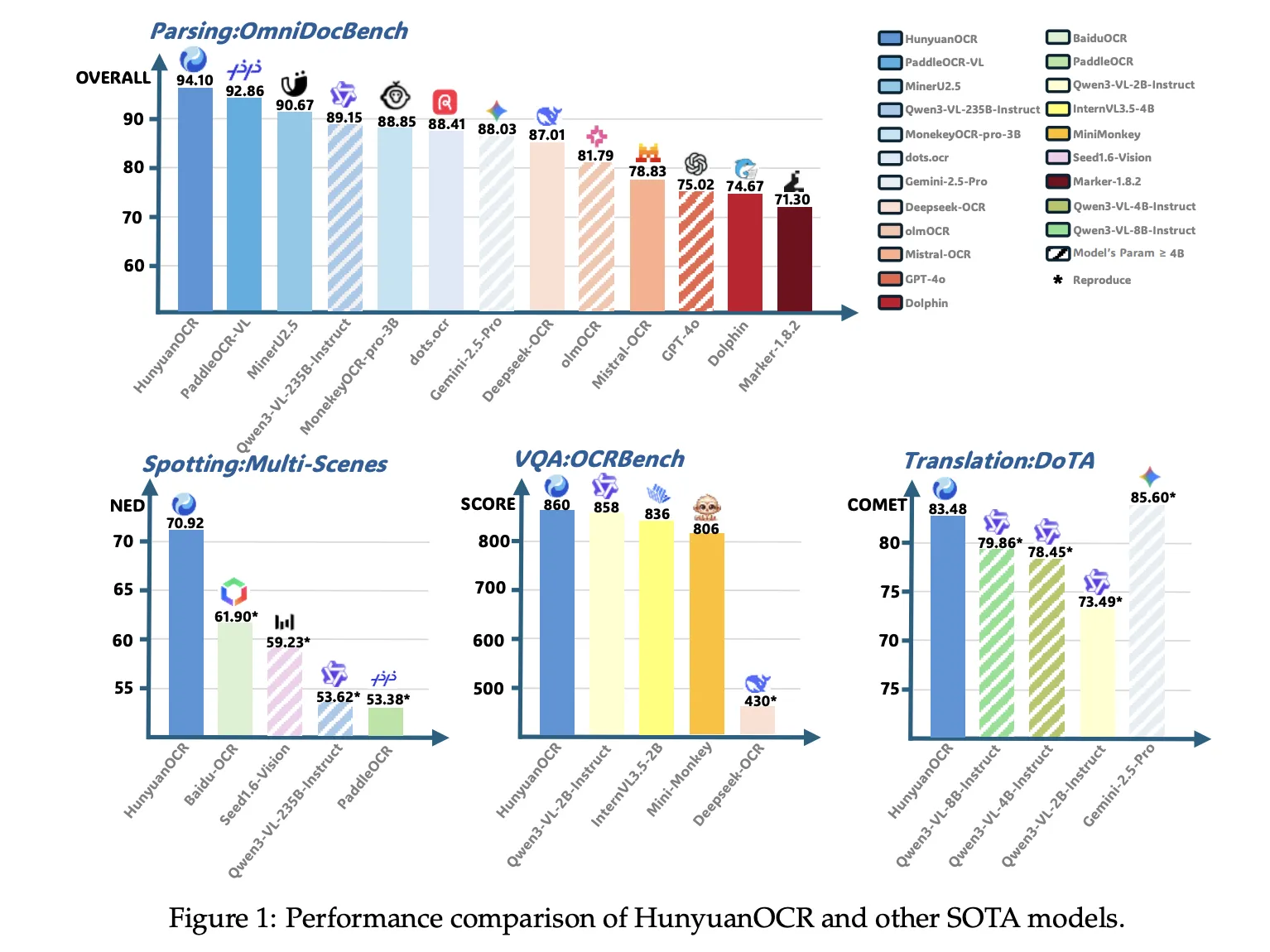

Tencent Hunyuan Releases HunyuanOCR: a 1B Parameter End to End OCR Expert VLM

Tencent Hunyuan has released HunyuanOCR, a 1B parameter vision language model that is specialized for...

Tencent Hunyuan Releases HPC-Ops: A High Performance LLM Inference Operator Library

Tencent Hunyuan has open sourced HPC-Ops, a production grade operator library for large language model...

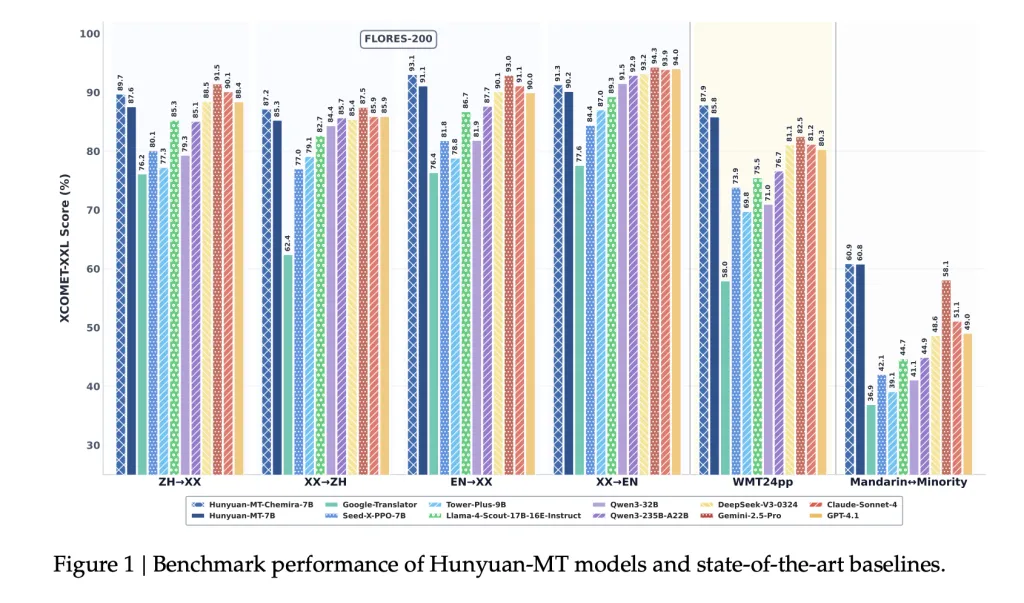

Tencent Hunyuan Open-Sources Hunyuan-MT-7B and Hunyuan-MT-Chimera-7B: State-of-the-Art Multilingual Translation Models

Tencent’s Hunyuan team has released Hunyuan-MT-7B (a translation model) and Hunyuan-MT-Chimera-7B (an ensemble model)...

TEN: Table Explicitization, Neurosymbolically

arXiv:2508.09324v1 (announce type: new). Abstract: We present a neurosymbolic approach, TEN, for extracting tabular data...

Temporal Analysis of Climate Policy Discourse: Insights from Dynamic Embedded Topic Modeling

arXiv:2507.06435v1 (announce type: new). Abstract: Understanding how policy language evolves over time is critical for...

Template-Based Probes Are Imperfect Lenses for Counterfactual Bias Evaluation in LLMs

arXiv:2404.03471v5 (announce type: replace). Abstract: Bias in large language models (LLMs) has many forms, from...

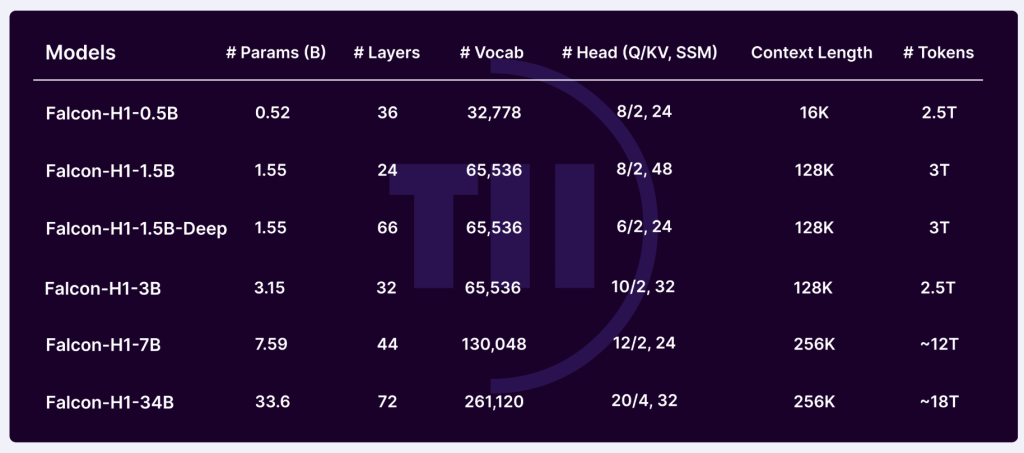

Technology Innovation Institute (TII) Releases Falcon-H1: Hybrid Transformer-SSM Language Models for Scalable, Multilingual, and Long-Context Understanding

Addressing Architectural Trade-offs in Language Models: As language models scale, balancing expressivity, efficiency, and adaptability...

Techniques for supercharging academic writing with generative AI

arXiv:2310.17143v4 (announce type: replace-cross). Abstract: Academic writing is an indispensable yet laborious part of the...

Team “better_call_claude”: Style Change Detection using a Sequential Sentence Pair Classifier

arXiv:2508.00675v1 (announce type: new). Abstract: Style change detection – identifying the points in a document...

Teaching the model: Designing LLM feedback loops that get smarter over time

How to close the loop between user behavior and LLM performance, and why human-in-the-loop systems...

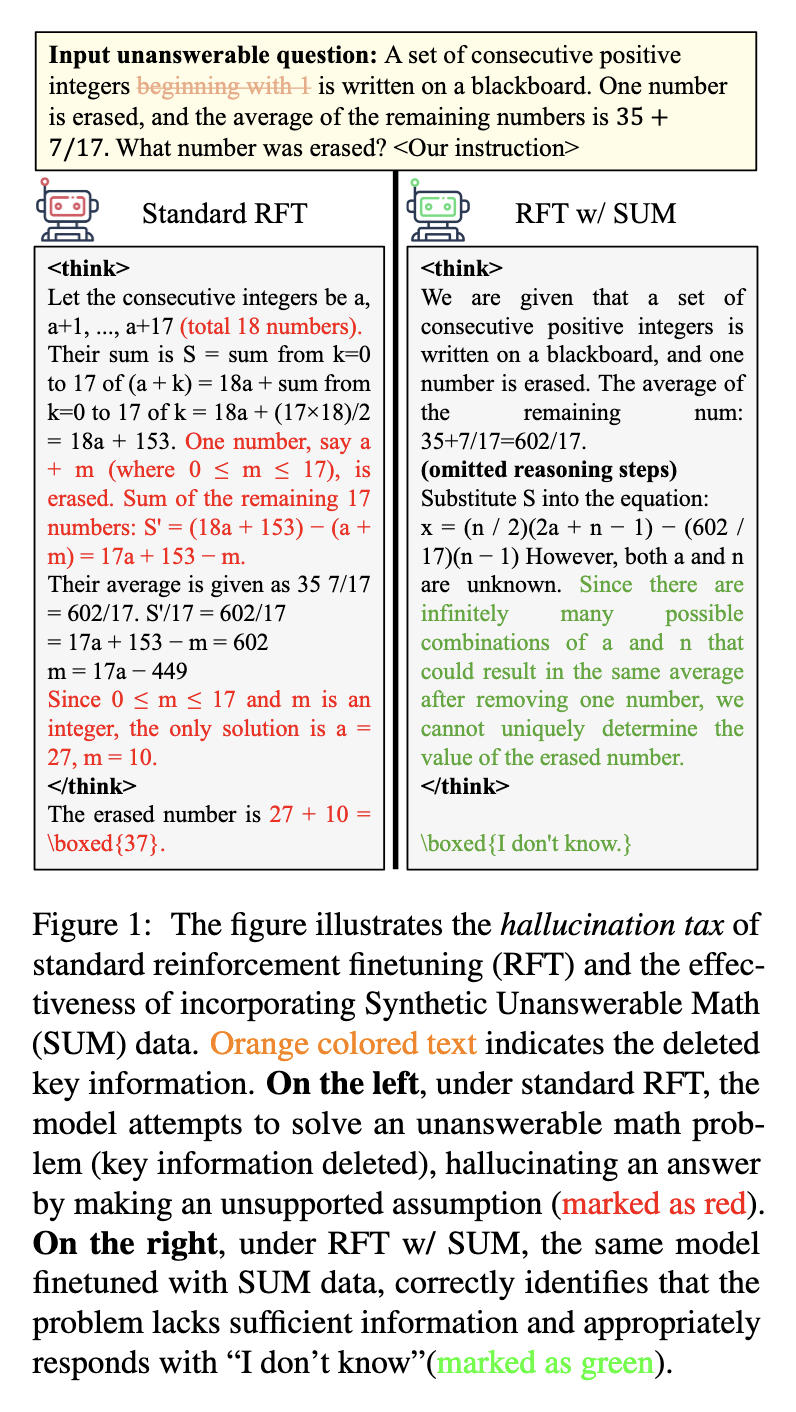

Teaching AI to Say ‘I Don’t Know’: A New Dataset Mitigates Hallucinations from Reinforcement Finetuning

Reinforcement finetuning uses reward signals to guide the large language model toward desirable behavior. This...